Evaluating Prompt & Model Performance in Klu

As you start using LLMs in production, you quickly need an efficient way to manage and make use of the data they generate. Klu lets you import existing data through the UI or SDK, manage and filter feedback, and export data whenever you need it.

Create Test Cases

Importing your evaluation data into Klu is the first step toward improving your model's performance, and it is straightforward.

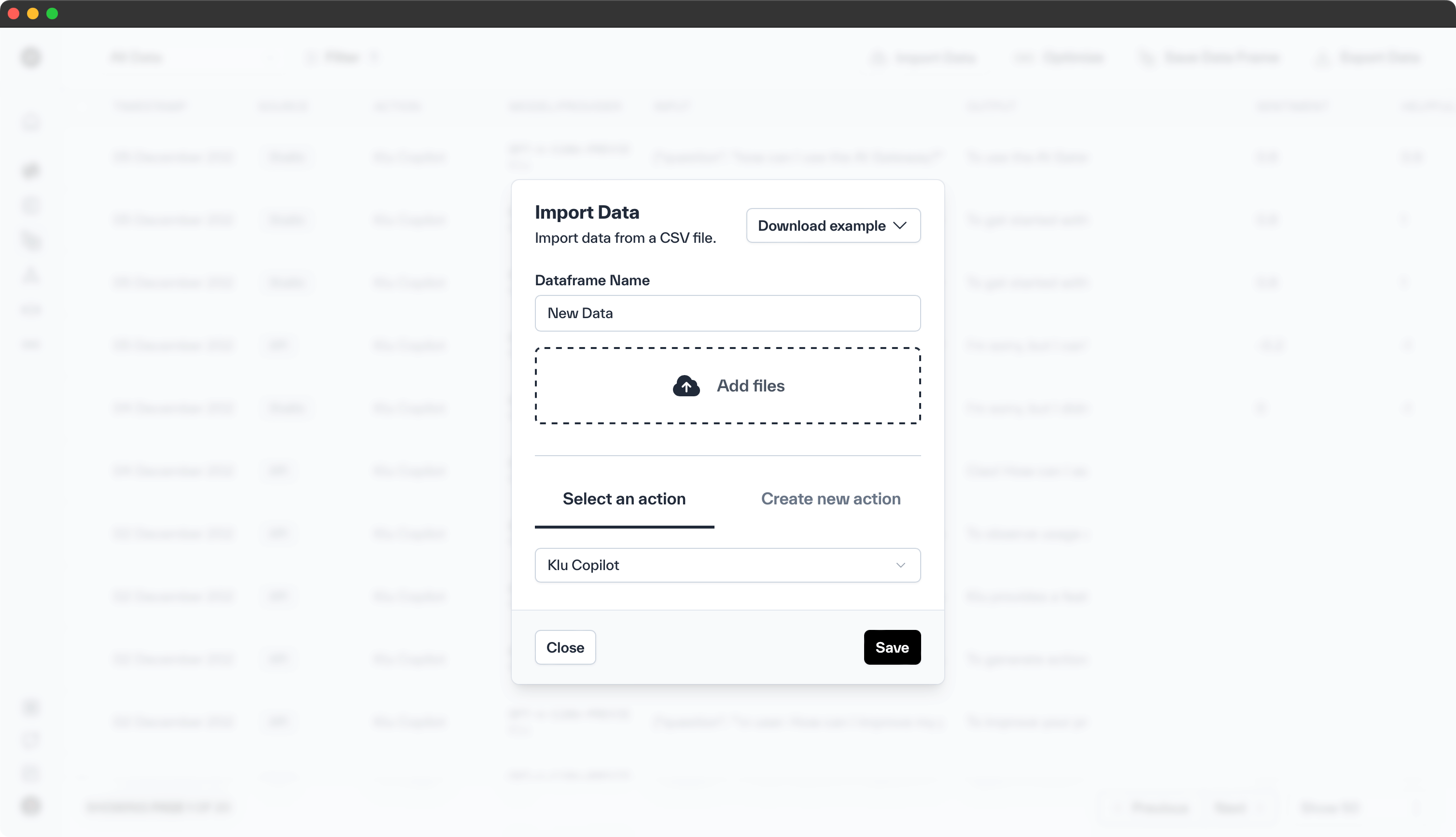

Importing via the UI

Go to the Evaluations section in your App and click Import Evaluation Data in the header menu. For best compatibility, we recommend the JSONL format, for which we provide ready-made templates.
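JSONL simply means one JSON object per line. The sketch below writes such a file with the Python standard library. The field names `prompt`, `response`, and `score` are assumptions chosen to mirror the SDK example further down; check them against the columns in Klu's JSONL template before importing.

```python
import json

# Hypothetical field names: align them with Klu's downloadable JSONL template.
records = [
    {"prompt": "What is the capital of France?", "response": "Paris.", "score": 5},
    {"prompt": "Translate 'hello' to Spanish.", "response": "Hola.", "score": 4},
]

def write_jsonl(path, rows):
    """Write one JSON object per line, which is all the JSONL format requires."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")

write_jsonl("evaluation_data.jsonl", records)
```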

Importing via the SDK

The Klu SDK simplifies the process of importing your evaluation data. Follow these steps:

- Initialize your Workspace and acquire your API key

- Create a new App and an Evaluation Action

- Pass the Evaluation Action GUID when you import data

Evaluation Data Import

```python
from klu import Klu

# Authenticate with the API key from your Workspace
klu = Klu("YOUR_API_KEY")

prompt = "Your evaluation prompt"
response = "Model's response"
evaluation_action = "evaluation_action_guid"  # GUID of your Evaluation Action
evaluation_score = "Score based on your criteria"

# Submit one evaluation data point to the Evaluation Action
eval_data = klu.evaluation.create(
    prompt=prompt,
    response=response,
    evaluation_action=evaluation_action,
    score=evaluation_score,
)

print(eval_data)
```

This lets you bring your evaluation data into Klu efficiently, so you can use it to optimize your model.
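When you have many data points, the single `klu.evaluation.create` call shown above can be repeated for every line of a JSONL file. This is a minimal sketch that keeps the Klu call injectable: the hypothetical `import_jsonl` helper accepts any callable taking the same keyword arguments, so you would pass `klu.evaluation.create` in practice and a stub when testing. The helper names and the `prompt`/`response`/`score` field names in the file are assumptions.

```python
import json
from typing import Callable, Iterator

def read_jsonl(path: str) -> Iterator[dict]:
    """Yield one record per non-empty line of a JSONL file."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:
                yield json.loads(line)

def import_jsonl(path: str, create: Callable[..., object], evaluation_action: str) -> list:
    """Call `create` once per record and collect the results.

    `create` is assumed to accept the same keyword arguments as the
    klu.evaluation.create call in the example above.
    """
    results = []
    for record in read_jsonl(path):
        results.append(
            create(
                prompt=record["prompt"],
                response=record["response"],
                evaluation_action=evaluation_action,
                score=record["score"],
            )
        )
    return results
```

In practice this would be called as `import_jsonl("evaluation_data.jsonl", klu.evaluation.create, "evaluation_action_guid")`.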

Create Evaluators

Access the Evaluations section through the sidebar navigation in your App.

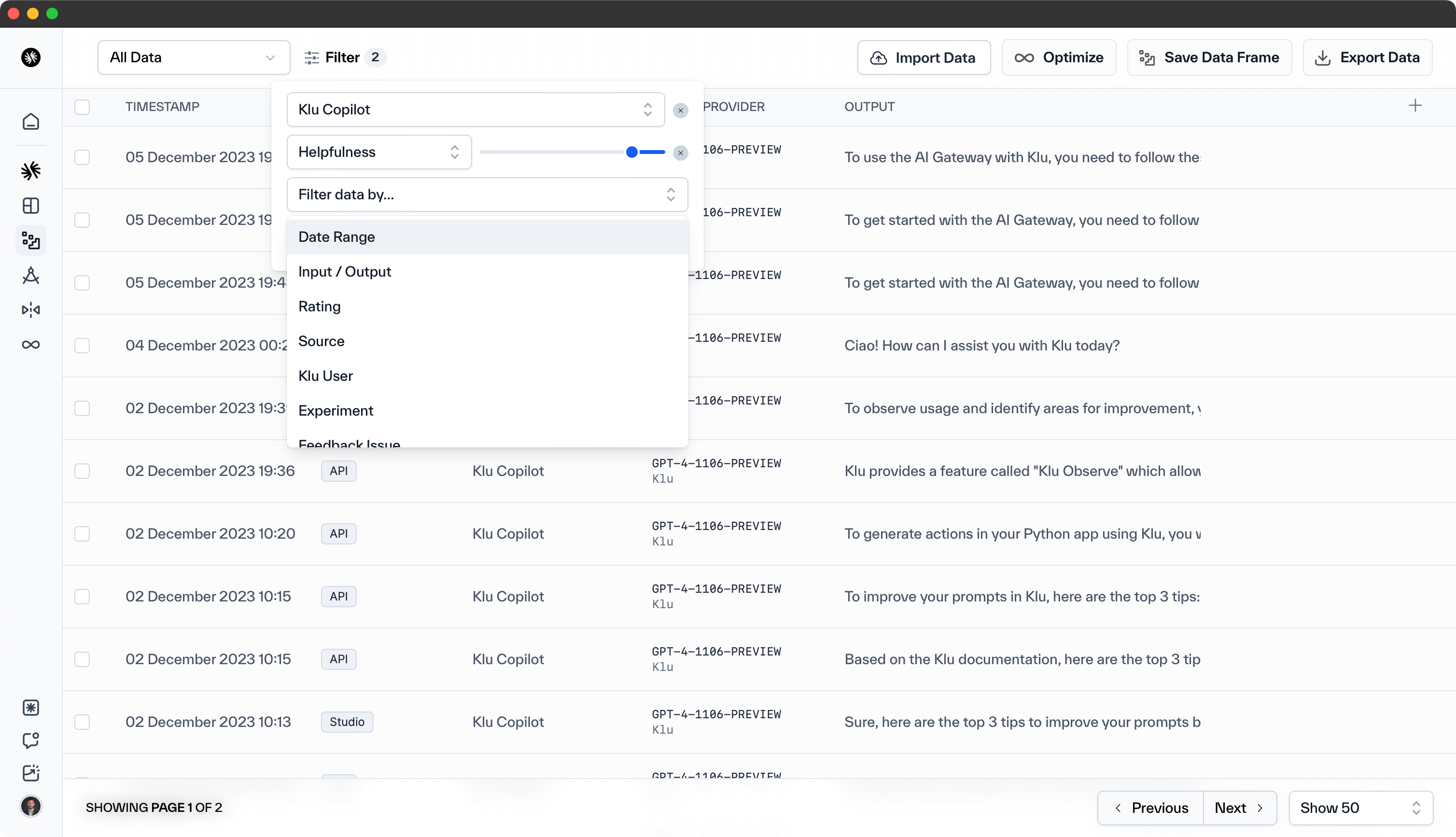

This area displays all the evaluation data points for your application, which you can filter to isolate specific datasets.

Klu currently supports these filters for evaluation data:

- Date Range: Select the period for the evaluation data.

- Evaluation Action: Identify the specific action associated with the evaluation.

- Prompt/Response Search: Find particular prompts or responses.

- Score: Filter by the evaluation scores, allowing for analysis of model performance.

- Source: Trace the origin of the evaluation data.

- Insights: Filter by metrics such as accuracy, relevance, or any custom metric.

- Klu User: Filter by the user or SDK key that submitted the evaluation.

- Evaluation Issue: Isolate data based on identified issues like inaccuracies or biases.
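Klu applies these filters in the app. As a rough illustration of how several filters narrow a dataset together, here is a local sketch over records you might have exported. The `EvalRecord` shape and the `filter_records` helper are hypothetical, and treating combined filters as a logical AND is an assumption rather than documented Klu behavior.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Optional

@dataclass
class EvalRecord:
    # Hypothetical record shape, loosely mirroring a few of the filters above.
    created: date
    prompt: str
    response: str
    score: float
    source: str

def filter_records(
    records: Iterable[EvalRecord],
    start: Optional[date] = None,
    end: Optional[date] = None,
    min_score: Optional[float] = None,
    text: Optional[str] = None,
    source: Optional[str] = None,
) -> List[EvalRecord]:
    """Keep records that satisfy every filter that is set (logical AND)."""
    needle = text.lower() if text else None
    kept = []
    for r in records:
        if start is not None and r.created < start:
            continue  # before the date range
        if end is not None and r.created > end:
            continue  # after the date range
        if min_score is not None and r.score < min_score:
            continue  # score too low
        if needle and needle not in r.prompt.lower() and needle not in r.response.lower():
            continue  # case-insensitive prompt/response search
        if source is not None and r.source != source:
            continue  # different origin
        kept.append(r)
    return kept
```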

For long-term analysis or model retraining, you have the option to save your filtered dataset as a named Evaluation Set.

Run Evals


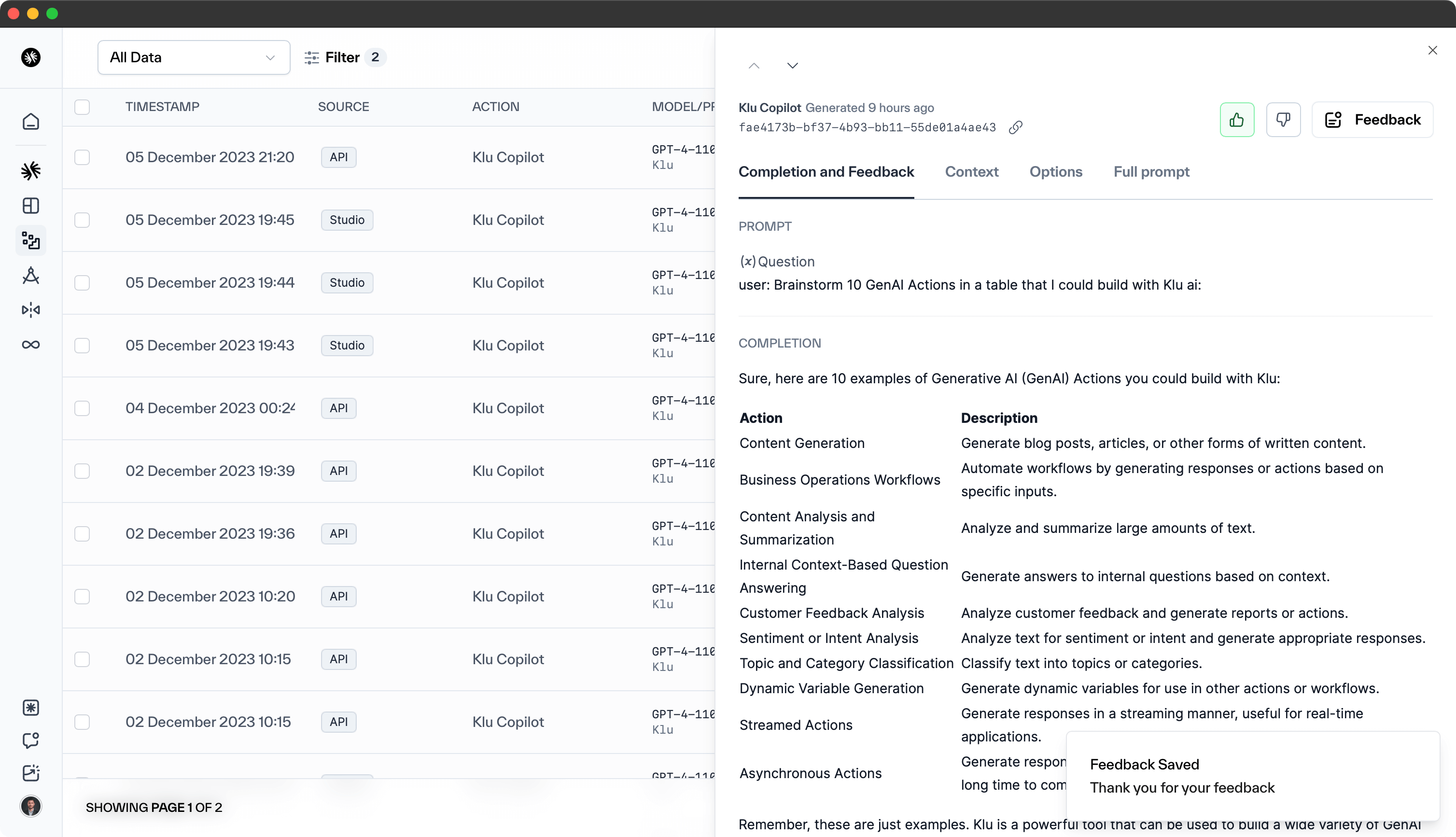

Klu lets you give feedback on any evaluation data point, which is crucial for refining model outputs. Use the thumbs up and thumbs down icons in the evaluation section to rate an evaluation as positive or negative.
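To turn thumbs ratings into a number you can track, one option is the share of thumbs-up ratings per Evaluation Action. This sketch assumes the ratings are available as `(evaluation_action, rating)` pairs with `"up"`/`"down"` values; that representation and the `approval_by_action` helper are hypothetical, not part of the Klu SDK.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

def approval_by_action(ratings: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Return the fraction of thumbs-up ratings for each evaluation action."""
    ups: Counter = Counter()
    totals: Counter = Counter()
    for action, rating in ratings:
        if rating not in ("up", "down"):
            raise ValueError(f"unexpected rating: {rating!r}")
        totals[action] += 1
        if rating == "up":
            ups[action] += 1
    # Counter returns 0 for actions that never received a thumbs up
    return {action: ups[action] / totals[action] for action in totals}
```

For example, `approval_by_action([("summarize", "up"), ("summarize", "down")])` returns `{"summarize": 0.5}`.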

For more detailed feedback, such as editing a response, flagging it, or adding context, click the icon to the right of the evaluation data point.

In the feedback modal, you can specify:

- Evaluation Context: additional context for the evaluation, such as the scenario or parameters used.

- Model Issue: issues with the model's response, such as inaccuracies or biases.

- Response Improvement: suggested improvements or corrections to the model's response.

These feedback mechanisms are invaluable for continuous model improvement and optimization.
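If you keep a local copy of this feedback, for instance alongside exported data, the three modal fields map naturally onto a small record type. The `Feedback` class below is a hypothetical structure for illustration, not Klu's internal schema; `to_dict` drops fields that were left blank.

```python
from dataclasses import asdict, dataclass
from typing import Optional

@dataclass
class Feedback:
    # Hypothetical structure mirroring the three feedback modal fields.
    evaluation_context: Optional[str] = None    # scenario or parameters used
    model_issue: Optional[str] = None           # e.g. "inaccuracy" or "bias"
    response_improvement: Optional[str] = None  # suggested corrected response

    def to_dict(self) -> dict:
        """Return only the fields that were filled in."""
        return {k: v for k, v in asdict(self).items() if v}
```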

Compare Performance

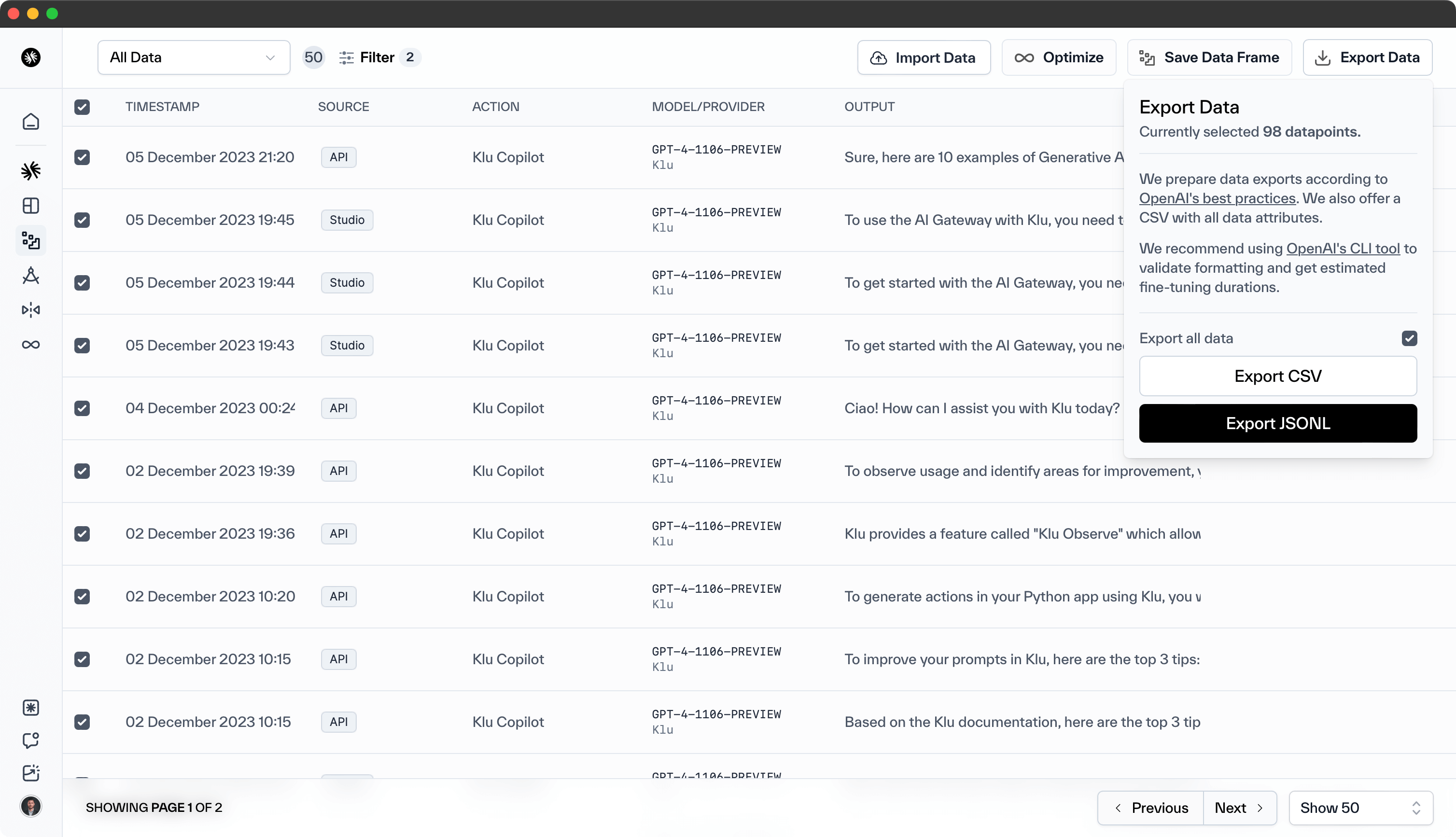

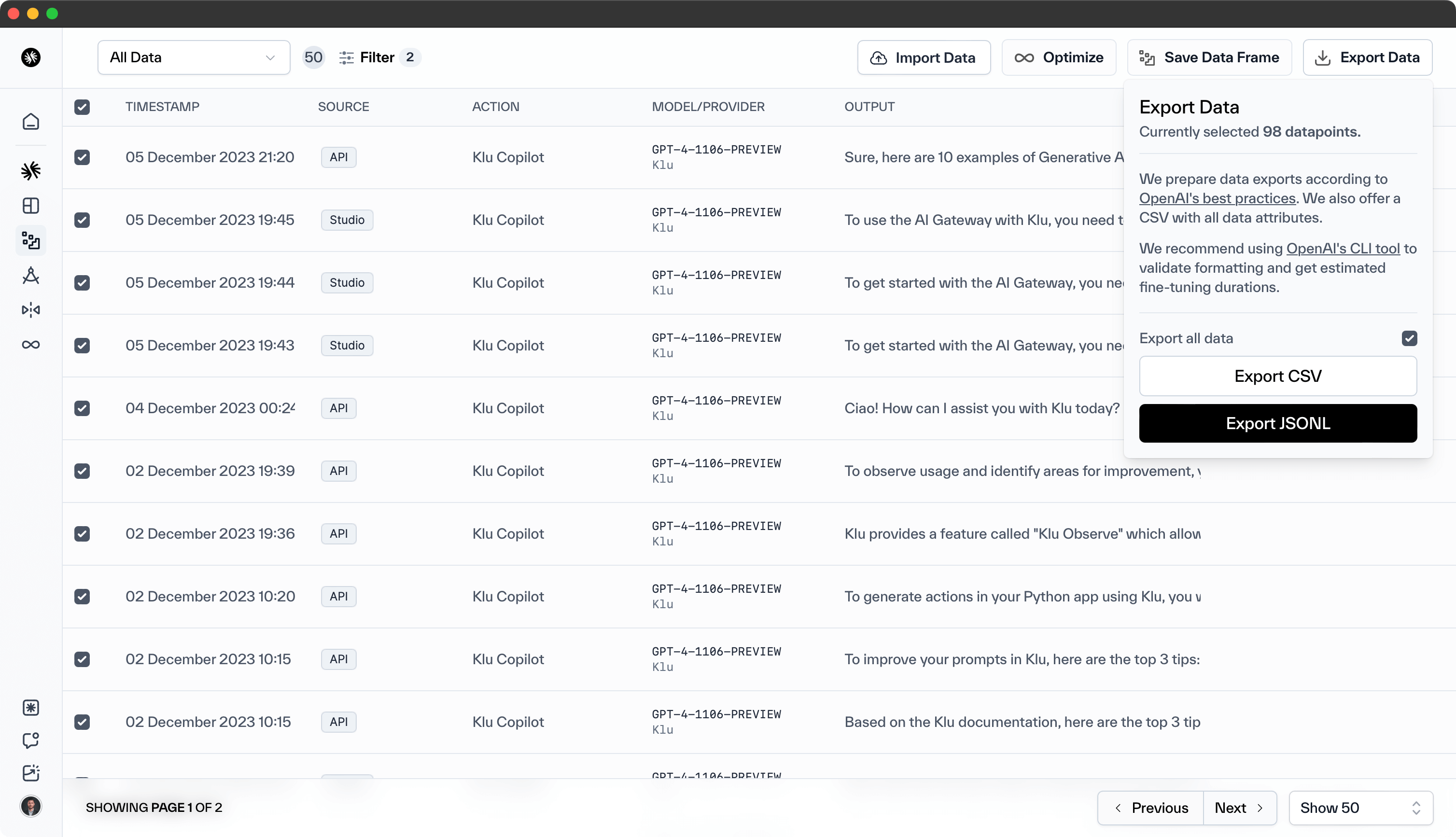

Klu empowers you to maintain control over your evaluation data, offering flexible export options when needed.

We provide two formats for exporting evaluation data:

- CSV: for easy analysis in spreadsheet applications.

- JSONL: for use in other systems, such as model retraining or further evaluations.

Exporting evaluation data is also supported through the API or SDK.
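If you post-process evaluation rows yourself, the practical difference between the two formats shows up in a few lines of standard-library Python. The `export_csv` and `export_jsonl` helpers below are hypothetical and operate on plain dictionaries; they do not call the Klu API or SDK.

```python
import csv
import json
from typing import Dict, List

def export_csv(path: str, rows: List[Dict]) -> None:
    """Write rows with a header line; column order follows the first row's keys."""
    if not rows:
        raise ValueError("nothing to export")
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)  # values containing commas are quoted automatically

def export_jsonl(path: str, rows: List[Dict]) -> None:
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")
```

CSV stores every value as text (a score of `4` reads back as `"4"`), while JSONL keeps numbers, booleans, and nested fields intact, which is why JSONL suits retraining pipelines better.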

Real-time Monitoring
